Lab 3

Prep

- Gitea set up

- MFA set up

- Add git ignore

- Secrets/Token Management

- Consider secret-scanning

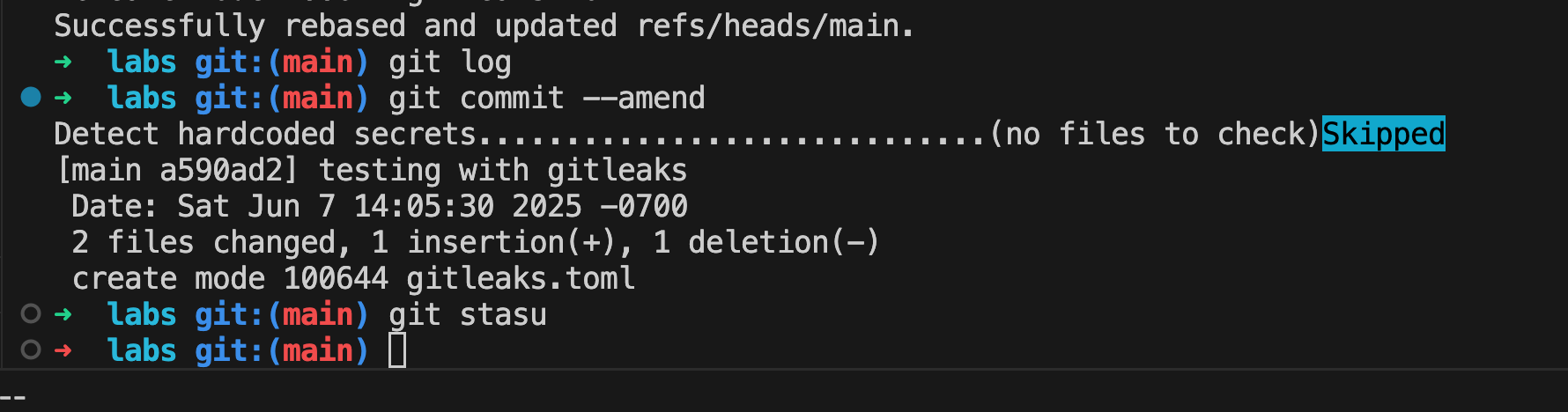

- Added gitleaks as a pre-commit hook

- Create & Connect to a Git repository

- Modify and make a second commit

- Test to see if Gitea Actions works

- Have an existing s3 bucket
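A minimal sketch of what the gitleaks pre-commit hook from the prep list could look like. The function name `run_secret_scan` is made up for illustration, and `gitleaks protect --staged` is the gitleaks v8 form of the command; newer releases may expect `gitleaks git --pre-commit --staged` instead, so treat the exact subcommand as an assumption to check against the installed version.

```shell
#!/usr/bin/env bash
# Sketch of the body of .git/hooks/pre-commit. Assumes gitleaks v8 is on PATH.

run_secret_scan() {
  if ! command -v gitleaks >/dev/null 2>&1; then
    # Do not block commits on machines where the scanner is missing.
    echo "gitleaks not found; skipping secret scan" >&2
    return 0
  fi
  # Scan only what is staged for this commit; --redact hides secret values in the output.
  gitleaks protect --staged --redact
}

# In the real hook file the last line would be:
#   run_secret_scan || exit 1
# because Git aborts the commit when the hook exits non-zero.
```

The hook file needs to be executable (`chmod +x .git/hooks/pre-commit`) or Git will skip it.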

Resources

- Capital One Data Breach

- Grant IAM User Access to Only One S3 Bucket

- IAM Bucket Policies

- Dumping S3 Buckets!

Lab

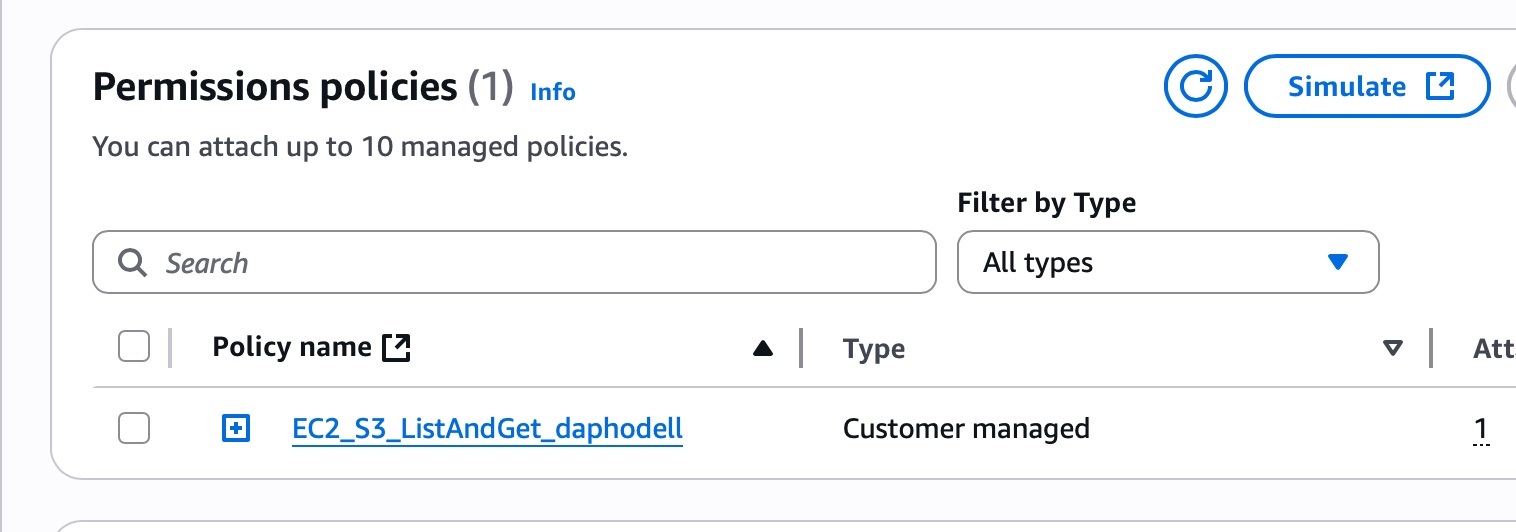

- create a custom IAM Policy

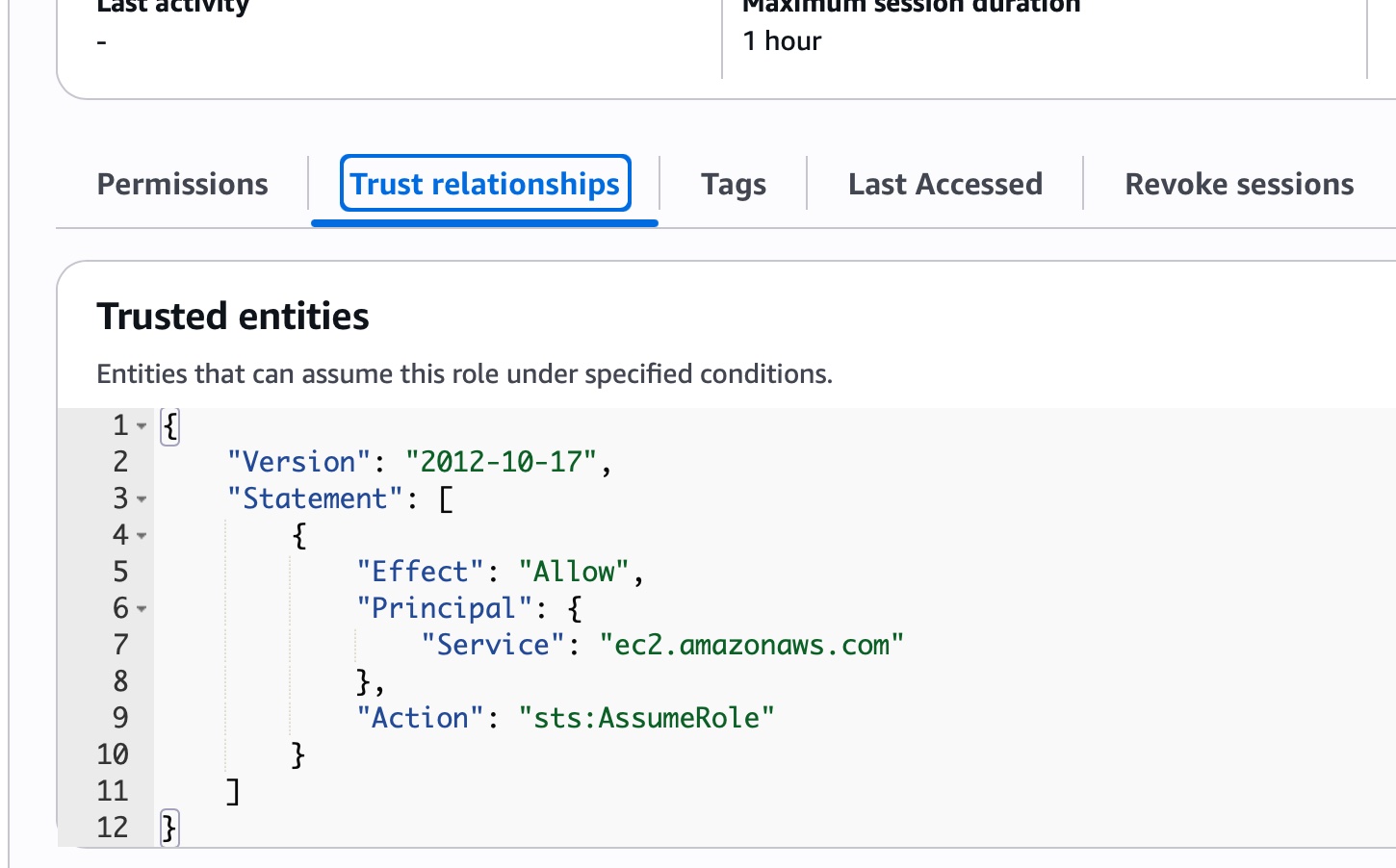

- create an IAM Role for EC2

- Attach the Role to your EC2 Instance

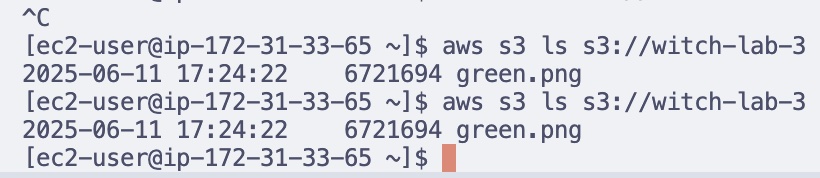

- Verify S3 access from the EC2 Instance

- HTTPS outbound was not set up

- I did not check the outbound rules, even though the lab explicitly called this out, because the instructions referred to lab 2 and I assumed they had already been set up (they had not). When the connection to s3 failed, I double-checked the lab 3 instructions.

Stretch

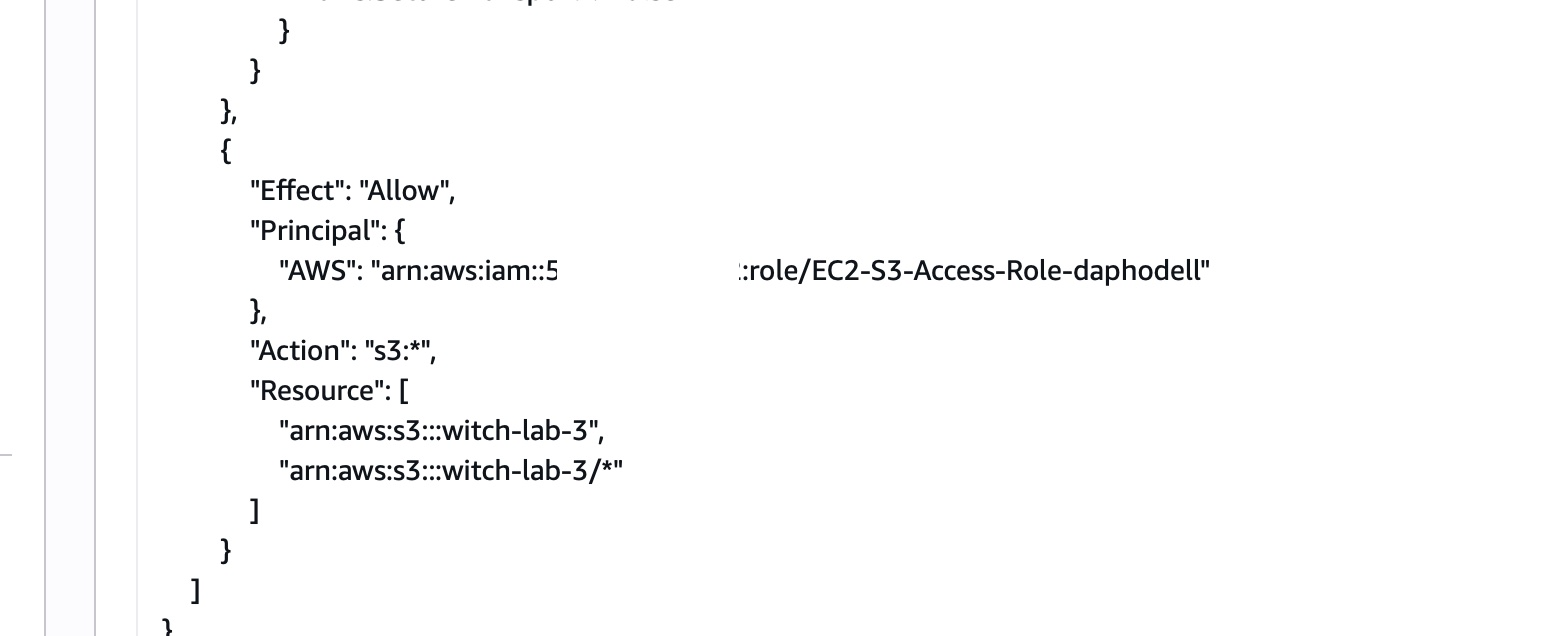

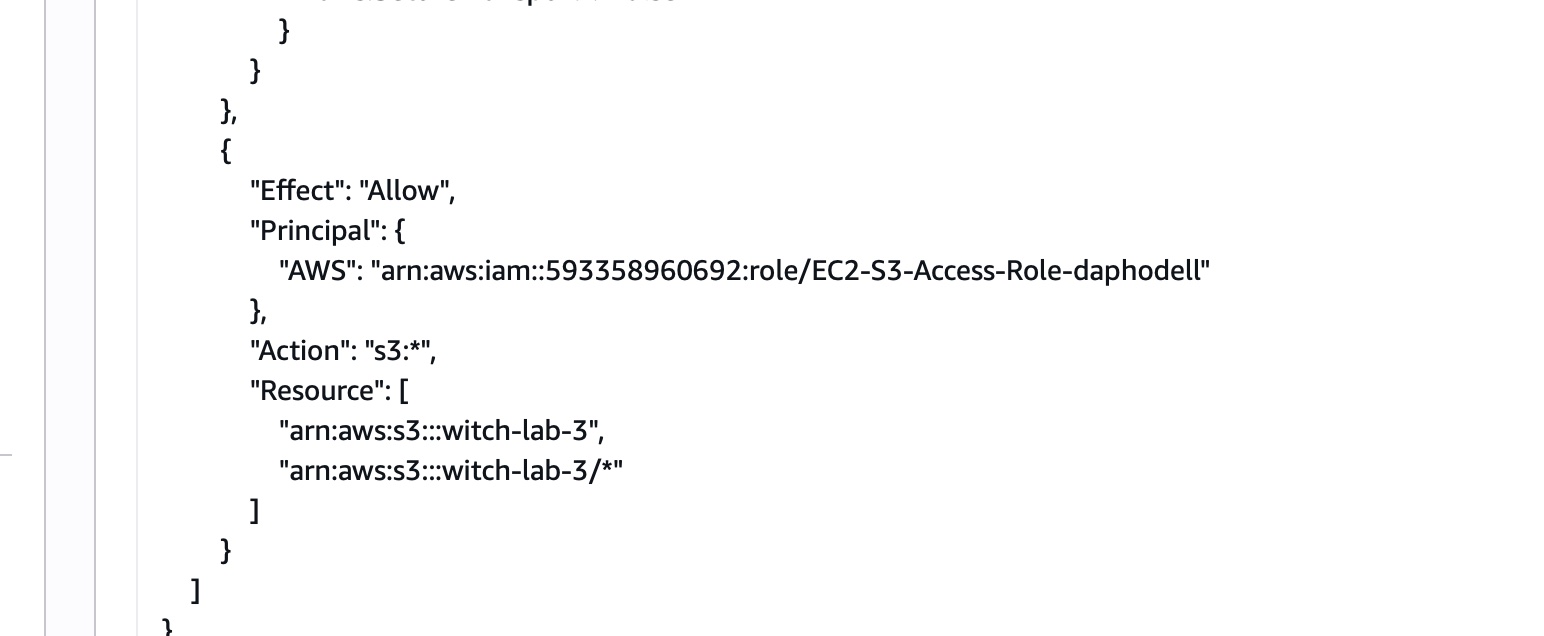

- Create a bucket policy that blocks all public access but allows your IAM role

- Implemented: guide
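For reference, a rough sketch of a bucket policy that allows a single IAM role, paired with the account-level public access block. This is not necessarily what the guide produces; the helper name `role_only_bucket_policy`, the role name `lab3-s3-role`, the account ID, and the choice of `s3:GetObject` / `s3:ListBucket` are all assumptions for illustration.

```shell
# Print a bucket policy that grants one IAM role read access to one bucket.
# Usage: role_only_bucket_policy <bucket-name> <role-arn>
role_only_bucket_policy() {
  local bucket="$1" role_arn="$2"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowLabRoleRead",
      "Effect": "Allow",
      "Principal": { "AWS": "${role_arn}" },
      "Action": ["s3:GetObject", "s3:ListBucket"],
      "Resource": [
        "arn:aws:s3:::${bucket}",
        "arn:aws:s3:::${bucket}/*"
      ]
    }
  ]
}
EOF
}

# Example usage (hypothetical account ID and role name):
#   aws s3api put-public-access-block --bucket witch-lab-3 \
#     --public-access-block-configuration \
#     BlockPublicAcls=true,IgnorePublicAcls=true,BlockPublicPolicy=true,RestrictPublicBuckets=true
#   role_only_bucket_policy witch-lab-3 "arn:aws:iam::123456789012:role/lab3-s3-role" > policy.json
#   aws s3api put-bucket-policy --bucket witch-lab-3 --policy file://policy.json
```

The public access block does the "block all public access" part; the policy itself only adds the allow for the role.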

- Experiment with requiring MFA or VPC conditions.

- MFA conditions

- MFA did not work out of the box after setting it in the s3 bucket policy. The ways I found you can configure MFA:

- stackoverflow

- official guide

- via cli roles - I set up a new set of role-trust relationships.

- Update s3 Role:

- Update action: sts:AssumeRole

- Update principal (for user -- could not target group)

- Add condition (MFA bool must be true)

- Commands referenced: I set up a script that looks like this

# Usage: pass the current MFA token as the first argument.

# Expects S3_ROLE, SESSION_TYPE, MFA_IDENTIFIER and BW_AWS_ACCOUNT_SECRET_ID in the environment.

MFA_TOKEN="$1"

if [ -z "$MFA_TOKEN" ]; then

echo "Error: Run with MFA token!"

exit 1

fi

if [ -z "$BW_AWS_ACCOUNT_SECRET_ID" ]; then

echo "env var BW_AWS_ACCOUNT_SECRET_ID must be set!"

exit 1

fi

# Pull the long-lived access keys from Bitwarden (jq -r prints raw strings without quotes).

AWS_SECRETS=$(bw get item "$BW_AWS_ACCOUNT_SECRET_ID")

export AWS_ACCESS_KEY_ID=$(echo "$AWS_SECRETS" | jq -r '.fields[0].value')

export AWS_SECRET_ACCESS_KEY=$(echo "$AWS_SECRETS" | jq -r '.fields[1].value')

# Exchange them, plus the MFA token, for temporary role credentials.

SESSION_OUTPUT=$(aws sts assume-role --role-arn "$S3_ROLE" --role-session-name "$SESSION_TYPE" --serial-number "$MFA_IDENTIFIER" --token-code "$MFA_TOKEN")

#echo $SESSION_OUTPUT

export AWS_SESSION_TOKEN=$(echo "$SESSION_OUTPUT" | jq -r '.Credentials.SessionToken')

export AWS_ACCESS_KEY_ID=$(echo "$SESSION_OUTPUT" | jq -r '.Credentials.AccessKeyId')

export AWS_SECRET_ACCESS_KEY=$(echo "$SESSION_OUTPUT" | jq -r '.Credentials.SecretAccessKey')

#echo $AWS_SESSION_TOKEN

#echo $AWS_ACCESS_KEY_ID

#echo $AWS_SECRET_ACCESS_KEY

aws s3 ls s3://witch-lab-3

- configuration via ~/.aws/credentials

- 1Password CLI with AWS Plugin

- I use bitwarden, which also has an AWS Plugin

- I've seen a lot more recommendations for 1Password (TBH it's more like 2 vs 0) for credential setup. Wonder why?
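The role update described under "via cli roles" (action `sts:AssumeRole`, a user as principal, MFA bool must be true) corresponds roughly to a trust policy like the one below. This is a sketch, not the exact policy used in the lab: the helper name `mfa_trust_policy`, the user ARN, and the role name `lab3-s3-role` are placeholders.

```shell
# Print a role trust policy that lets one IAM user assume the role only with MFA.
# Usage: mfa_trust_policy <user-arn>
mfa_trust_policy() {
  local user_arn="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "AWS": "${user_arn}" },
      "Action": "sts:AssumeRole",
      "Condition": {
        "Bool": { "aws:MultiFactorAuthPresent": "true" }
      }
    }
  ]
}
EOF
}

# Example usage (hypothetical user and role names):
#   mfa_trust_policy "arn:aws:iam::123456789012:user/lab-user" > trust.json
#   aws iam update-assume-role-policy --role-name lab3-s3-role --policy-document file://trust.json
```

With this in place, `aws sts assume-role` only succeeds when `--serial-number` and `--token-code` are supplied, which is what the script above does.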

- Host a static site

- Enable a static website hosting (

index.html) - Configure route 53 alias or CNAME for

resume.<yourdomain>to the bucket endpoint. - Deploy CloudFront with ACM certificate for HTTPS

- see: resume <<<<<<< HEAD

- Enable a static website hosting (

- Private "Invite-Only" Resume Hosting

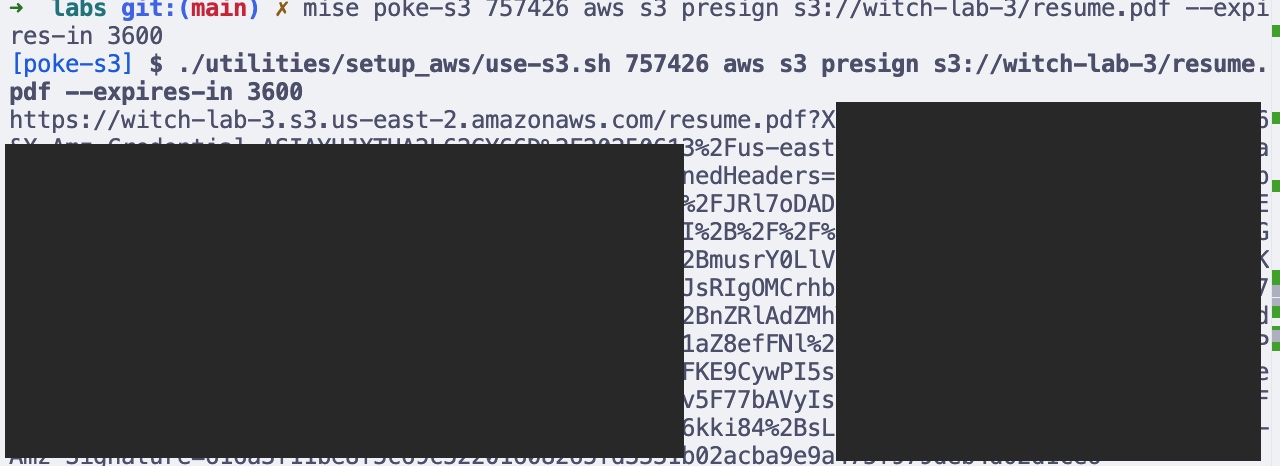

- Pre-signed URLs

aws s3 presign s3://<YOUR_BUCKET_NAME>/resume.pdf --expires-in 3600=======

- Cloudflare Edge Certificate -> Cloudfront -> S3 Bucket

- In this step, I disabled "static website hosting" on the s3 bucket

- This was actually maddening to set up. For reasons I can't understand even after Google Searching and ChatGPTing, my s3 bucket is under us-east-2 and Cloudfront kept redirecting me to the us-east-1 for some reason. I don't like switching up regions under AWS because this way it's easy to forget what region you created a specific service in because they're hidden depending on what region is active at the moment.

- Pre-signed URLs

Private "Invite-Only" Resume Hosting

- Pre-signed URLs

aws s3 presign s3://<YOUR_BUCKET_NAME>/resume.pdf --expires-in 3600(see: presigned url screenshot)

1437cee (Add resume pdf & html)

Further Exploration

- Snapshots & AMIs

- Create an EBS snapshot of /dev/xvda

- Register/create an AMI from that snapshot

- How do you "version" a server with snapshots? Why is this useful?

Cattle, not pets: this is useful for following the concept of treating your servers as "cattle, not pets". Being able to keep versioned snapshots of your machines means there's nothing special about your currently running server. If it goes down (or you need to shoot it down), you can restore it on another machine from an older snapshot.

Or if you suddenly needed to scale your operation from 1 machine to many, where each machine needed the exact same configuration as the others (all need fail2ban installed, etc.), you can do that with an AMI.

- Launch a new instance from your AMI
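The snapshot -> AMI -> new instance flow above can be sketched as three small wrappers. They print the commands by default (dry run) so nothing is created by accident. The function names, the `server-<label>` naming scheme, the `x86_64` architecture and the `t3.micro` instance type are assumptions; only `/dev/xvda` comes from the notes.

```shell
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Usage: snapshot_volume <volume-id> <version-label>
snapshot_volume() {
  run aws ec2 create-snapshot --volume-id "$1" --description "server-$2"
}

# Usage: register_ami <snapshot-id> <version-label>
register_ami() {
  run aws ec2 register-image --name "server-$2" \
    --architecture x86_64 --virtualization-type hvm \
    --root-device-name /dev/xvda \
    --block-device-mappings "DeviceName=/dev/xvda,Ebs={SnapshotId=$1}"
}

# Usage: launch_from_ami <ami-id>
launch_from_ami() {
  run aws ec2 run-instances --image-id "$1" --instance-type t3.micro --count 1
}
```

Putting a version label in the snapshot description and AMI name is one simple way to "version" the server: rolling back means launching from the AMI with the older label.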

- Linux & Security Tooling

- ss -tulpn, lsof, auditctl to inspect services and audit

- Install & run:

- nmap localhost

- tcpdump -c 20 -ni eth0

- lynis audit system

- fail2ban-client status

- OSSEC/Wazuh or ClamAV

- Scripting & Automation

- Bash: report world-writable files

- Python with boto3: list snapshots, start/stop instances
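For the "report world-writable files" item, a minimal sketch using `find`. The function name `report_world_writable` is made up for illustration, and it only reports regular files, not directories.

```shell
# List regular files that any user on the system can write to.
# Usage: report_world_writable [dir]   (defaults to /)
report_world_writable() {
  local root="${1:-/}"
  # -xdev: stay on one filesystem; -perm -0002: the "other" write bit is set.
  # Permission-denied noise from unreadable directories is discarded.
  find "$root" -xdev -type f -perm -0002 -print 2>/dev/null
}

# Example usage:
#   report_world_writable /etc
```

Running it against `/` as root gives the fullest picture; as a normal user, directories you cannot read are silently skipped.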

- Convert to terraform

- IAM Role

- IAM Policy

- IAM Group

- EC2 Instance

- S3 Bucket

Further Reading

Reflection

- What I built

- A secured s3 bucket whose content can only be accessed via multi-factor authentication. Good for storing particularly sensitive information.

- A minimal HTML website served from an S3 bucket

- Challenges

- The stretch goal for setting up s3 + MFA was a bit of a pain:

- Groups cannot be used as the principal in a trust relationship, which broke my mental model of the ideal way to onboard/offboard engineers by simply adding or removing them from groups. I ended up having to assign a user as the principal of the trust relationship for my s3 role, although I may have set up the IAM permissions in an inefficient way.

- Issues between setting up Cloudflare -> CloudFront -> s3 bucket

- I think adding an extra service (Cloudflare, where I host my domain) added a little bit of complexity, though my main issue was figuring out how to set up the ACM cert -> CloudFront distribution -> S3. Most of the instructions I was able to parse through with ChatGPT. I have to say I had a much better time reading through those instructions than the official AWS docs, which led me through nested links (understandably, because there seem to be multiple ways of doing everything).

- Security concerns

On scale and security at scale

Terms

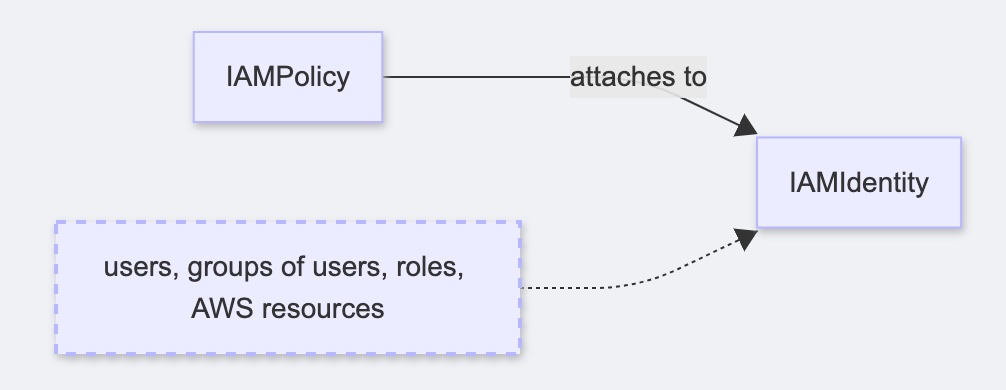

Identity and Access Management

graph LR

IAMPolicy -- attaches to --> IAMIdentity

ExplainIAMIdentity[users, groups of users, roles, AWS resources]:::aside

ExplainIAMIdentity -.-> IAMIdentity

classDef aside stroke-dasharray: 5 5, stroke-width:2px;